[ps2id id=’data-collection-methods’ target=”/]

18. DATA COLLECTION METHODS

18a. Data collection methods

Plans for assessment and collection of outcome, baseline, and other trial data, including any related processes to promote data quality (e.g., duplicate measurements, training of assessors) and a description of study instruments (e.g., questionnaires, laboratory tests) along with their reliability and validity, if known. Reference to where data collection forms can be found, if not in the protocol.

Example 1

“Primary Outcome

Delirium Recognition: In accordance with national guidelines [Reference X], the study will identify delirium by using the RASS [Richmond Agitation-Sedation Scale] and the CAM-ICU [Confusion Assessment Method for the ICU] on all patients who are admitted directly from the emergency room or transferred from other services to the ICU [intensive care unit]. Such assessment will be performed after 24 hours of ICU admission and twice daily until discharge from the hospital . . . RASS has excellent inter-rater reliability among adult medical and surgical ICU patients and has excellent validity when compared to a visual analogue scale and other selected sedation scales [Reference X] . . . The CAM-ICU was chosen because of its practical use in the ICU wards, its acceptable psychometric properties, and based on the recommendation of national guidelines [Reference X] . . . The CAM-ICU diagnosis of delirium was validated against the DSM-III-R [Diagnostic and Statistical Manual of Mental Disorders, Third Edition – Revised] delirium criteria determined by a psychiatrist and found to have a sensitivity of 97% and a specificity of 92% [Reference X]. The CAM-ICU has been developed, validated and applied into ICU settings and multiple investigators have used the same method to identify patients with delirium [Reference X].

Delirium Severity: Since the CAM-ICU does not evaluate delirium severity, we selected the Delirium Rating Scale revised-1998 (DRS-R-98) [Reference X] developed by Dr. P.T. and colleagues. The DRS-R-98 was designed to evaluate the breadth of delirium symptoms for phenomenological studies in addition to measuring symptom severity with high sensitivity and specificity . . . The DRS-R-98 is a 16-item clinician-rated scale with anchored items descriptions . . . The DRS-R-98 has excellent inter-rater reliability (intra-class correlation 0.97) and internal consistency (Cronbach’s alpha 0.94) [Reference X].

Secondary Outcomes

The study will collect demographic and baseline functional information from the patient’s legally authorized representative and/or caregivers. Cognitive function status will be obtained by interviewing the patient’s legally authorized representative using the Informant Questionnaire on Cognitive Decline in the Elderly (IQCODE). IQCODE is a questionnaire that can be completed by a relative or other caregiver to determine whether that person has declined in cognitive functioning. The IQCODE lists 26 everyday situations . . . Each situation is rated by the informant for amount of change over the previous 10 years, using a Likert scale ranging from 1-much improved to 5-much worse. The IQCODE has a sensitivity between 69% to 100% and specificity of 80% to 96% for dementia [Reference X].

Utilizing the electronic medical record system (RMRS), we will collect several data points of interest at baseline and throughout the study period . . . We have previously defined hospital-related consequences to include: the number of patients with documented falls, use of physical restraints . . . These will be assessed using the RMRS, direct daily observation, and retrospective review of the electronic medical record. This definition of delirium-related hospital complications has been previously used and published [Reference X].”266
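The sensitivity and specificity quoted for the CAM-ICU come from a 2×2 comparison of the instrument against a reference standard (here, psychiatrist-determined DSM-III-R criteria). A minimal Python sketch of that arithmetic follows. The counts are hypothetical, chosen only so the output resembles the quoted figures; they are not the published validation data.

```python
def sensitivity_specificity(tp: int, fn: int, fp: int, tn: int) -> tuple[float, float]:
    """Return (sensitivity, specificity) of an instrument against a reference standard.

    tp: instrument positive, reference positive
    fn: instrument negative, reference positive
    fp: instrument positive, reference negative
    tn: instrument negative, reference negative
    """
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one reference-positive and one reference-negative case")
    sensitivity = tp / (tp + fn)  # share of true cases the instrument detects
    specificity = tn / (tn + fp)  # share of non-cases the instrument correctly rules out
    return sensitivity, specificity


# Hypothetical counts: 100 patients with delirium by the reference standard, 100 without
sens, spec = sensitivity_specificity(tp=97, fn=3, fp=8, tn=92)
print(f"sensitivity = {sens:.0%}, specificity = {spec:.0%}")
```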

Example 2

“Training and Certification Plans

. . . Each center’s personnel will be trained centrally in the study requirements, standardized measurement of height, weight, and blood pressure, requirements for laboratory specimen collection including morning urine samples, counseling for adherence and the eliciting of information from study participants in a uniform reproducible manner.

. . . The data to be collected and the procedures to be conducted at each visit will be reviewed in detail. Each of the data collection forms and the nature of the required information will be discussed in detail on an item by item basis. Coordinators will learn how to code medications using the WHODrug software and how to code symptoms using the MedDRA software. Entering data forms, responding to data discrepancy queries and general information about obtaining research quality data will also be covered during the training session.

. . .

13.7. Quality Control of the Core Lab

Data from the Core Lab will be securely transmitted in batches and quality controlled in the same manner as Core Coordinating Center data; i.e. data will be entered and verified in the database on the Cleveland Clinic Foundation SUN with a subset later selected for additional quality control. Appropriate edit checks will be in place at the key entry (database) level.

The Core Lab is to have an internal quality control system established prior to analyzing any FSGS [Focal segmental glomerulosclerosis] samples. This system will be outlined in the Manual of Operations for the Core Lab(s) which is prepared and submitted by the Core Lab to the DCC [Data Coordinating Center] prior to initiating of the study.

At a minimum this system must include:

1) The inclusion of at least two known quality control samples; the reported measurements of the quality control samples must fall within specified ranges in order to be certified as acceptable.

2) Calibration at FDA [Food and Drug Administration] approved manufacturers’ recommended schedules.

13.8. Quality Control of the Biopsy Committee

The chair of the pathology committee will circulate to all of the study pathologist . . . samples [sic] biopsy specimens for evaluation after criteria to establish diagnosis of FSGS has been agreed. This internal review process will serve to ensure common criteria and assessment of biopsy specimens for confirmation of diagnosis of FSGS.”267
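The Core Lab rule in this example, that at least two known quality control samples must fall within specified ranges before results are accepted, can be written as a simple acceptance check. The sketch below assumes inclusive limits and uses hypothetical control names and ranges; a real laboratory would apply the rules documented in its own Manual of Operations.

```python
from dataclasses import dataclass


@dataclass
class ControlResult:
    """One measured quality control sample and its pre-specified acceptance range."""
    name: str
    measured: float
    low: float   # lower acceptance limit (inclusive)
    high: float  # upper acceptance limit (inclusive)

    def in_range(self) -> bool:
        return self.low <= self.measured <= self.high


def run_is_acceptable(controls: list[ControlResult], min_controls: int = 2) -> bool:
    """Certify a run only if enough controls were assayed and every one is in range."""
    if len(controls) < min_controls:
        return False
    return all(c.in_range() for c in controls)


# Hypothetical low and high controls for one analytical run
run = [
    ControlResult("control_low", measured=0.52, low=0.45, high=0.55),
    ControlResult("control_high", measured=2.10, low=1.90, high=2.30),
]
print(run_is_acceptable(run))      # True
print(run_is_acceptable(run[:1]))  # False: fewer than two controls assayed
```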

Explanation

The validity and reliability of trial data depend on the quality of the data collection methods. The processes of acquiring and recording data often benefit from attention to training of study personnel and use of standardised, pilot-tested methods. These should be identical for all study groups, unless precluded by the nature of the intervention.

The choice of methods for outcome assessment can affect study conduct and results.268-273 Substantially different responses can be obtained for certain outcomes (e.g., harms) depending on who answers the questions (e.g., the participant or investigator) and how the questions are presented (e.g., discrete options or open ended).269;274-276 Also, when compared to paper-based data collection, the use of electronic handheld devices and Internet websites has the potential to improve protocol adherence, data accuracy, user acceptability, and timeliness of receiving data.268;270;271;277

The quality of data also depends on the reliability, validity, and responsiveness of data collection instruments such as questionnaires278 or laboratory instruments. Instruments with low inter-rater reliability will reduce statistical power,272 while those with low validity will not accurately measure the intended outcome variable. One study found that only 35% (47/133) of randomised trials in acute stroke used a measure with established reliability or validity.279 Modified versions of validated measurement tools may no longer be considered validated, and use of unpublished measurement scales can introduce bias and inflate treatment effect sizes.280
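Inter-rater reliability for a categorical rating (for example, delirium present or absent) is often summarised with Cohen's kappa, which corrects raw agreement for the agreement expected by chance; continuous scores such as a DRS-R-98 total are more usually summarised with an intraclass correlation. A minimal sketch, assuming two raters and hypothetical ratings of the same ten patients:

```python
from collections import Counter


def cohens_kappa(rater_a: list, rater_b: list) -> float:
    """Cohen's kappa for two raters assigning categorical ratings to the same subjects."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and paired")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's marginal category frequencies
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings by two trained assessors
assessor_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "neg", "neg", "pos", "neg"]
assessor_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "neg", "pos", "pos", "neg"]
print(round(cohens_kappa(assessor_1, assessor_2), 3))
```

Here raw agreement is 80%, but because both assessors rate about half the patients positive, much of that agreement would occur by chance, and kappa is correspondingly lower.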

Standard processes should be implemented by local study personnel to enhance data quality and reduce bias by detecting and reducing the amount of missing or incomplete data, inaccuracies, and excessive variability in measurements.281-285 Examples include standardised training and testing of outcome assessors to promote consistency; tests of the validity or reliability of study instruments; and duplicate data measurements.
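Duplicate measurement is one of the processes listed above. A minimal sketch, assuming a hypothetical rule in which two readings are averaged if they agree within a set tolerance and a repeat is requested otherwise; the 10 mmHg tolerance and participant IDs are illustrative, not taken from any protocol:

```python
from typing import Optional


def combine_duplicates(first: float, second: float, tolerance: float) -> tuple[Optional[float], bool]:
    """Average two duplicate readings, or flag the pair if they differ by more than tolerance.

    Returns (mean or None, needs_repeat).
    """
    if abs(first - second) > tolerance:
        return None, True  # discordant pair: prompt a repeat measurement
    return (first + second) / 2, False


# Hypothetical systolic blood pressure pairs (mmHg) with an assumed 10 mmHg tolerance
readings = {"P001": (128, 132), "P002": (141, 118), "P003": (120, 120)}
for pid, (r1, r2) in readings.items():
    value, repeat = combine_duplicates(r1, r2, tolerance=10)
    print(pid, value, "repeat" if repeat else "ok")
```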

A clear protocol description of the data collection process – including the personnel, methods, instruments, and measures to promote data quality – can facilitate implementation and help protocol reviewers to assess their appropriateness. Inclusion of data collection forms in the protocol (i.e., as appendices) is highly recommended, as the way in which data are obtained can substantially affect the results. If the forms are not included in the protocol, a reference to where they can be found should be provided. If performed, pilot testing and assessment of reliability and validity of the forms should also be described. [ps2id id=’retention’ target=”/]

18b. Retention

Plans to promote participant retention and complete follow-up, including list of any outcome data to be collected for participants who discontinue or deviate from intervention protocols.

Example 1

“5.2.2 Retention

. . . As with recruitment, retention addresses all levels of participant.

At the parent and student level, study investigators and staff:

- Provide written feedback to all parents of participating students about the results of the “health screenings” . . .

- Maintain interest in the study through materials and mailings . . .

- Send letters to parents and students prior to the final data collection, reminding them of the upcoming data collection and the incentives the students will receive.

At the school level, study investigators and staff:

- Provide periodic communications via newsletters and presentations to inform the school officials/staff, students, and parents about type 2 diabetes, the current status of the study, and plans for the next phase, as well as to acknowledge their support . . .

- Become a presence in the intervention schools to monitor and maintain consistency in implementation . . . be as flexible as possible with study schedule and proactive in resolving conflicts with schools.

- Provide school administration and faculty with the schedule or grid showing how the intervention fits into the school calendar . . .

- Solicit support from parents, school officials/staff, and teachers . . .

- Provide periodic incentives for school staff and teachers.

- Provide monetary incentives for the schools that increase with each year of the study . . .

| SCHOOL | | |
|---|---|---|
| Intervention School | school program enhancement | $2000 in year 1, $3000 in year 2, $4000 in year 3 |
| | PE [physical education] class equipment required to implement intervention | ~$15,000 over 3 years |
| | food service department to defray costs of nutrition intervention | $3000/year |
| Control School | school program enhancement | $2000 in year 1, $4000 in year 2, $6000 in year 3 |

. . .

| STUDENT | | |
|---|---|---|
| All | return consent form (signed or not) | gift item worth ~$5 |
| | participation in health screening data collection measures and forms | $50 baseline (6th grade), $10 interim (7th grade), $60 end of study (8th grade) |

| FAMILY | | |
|---|---|---|
| Intervention Parents | focus groups to provide input about family outreach events and activities | $35/year per parent, up to 2 focus groups per field center, 6-10 participants per focus group |

”286 [Adapted from original table]

Example 2

“5.4 Infant Evaluations in the Case of Treatment Discontinuation or Study Withdrawal

All randomized infants completing the 18-month evaluation schedule will have fulfilled the infant clinical and laboratory evaluation requirements for the study . . .

All randomized infants who are prematurely discontinued from study drug will be considered off study drug/on study and will follow the same schedule of events as those infants who continue study treatment except adherence assessment. All of these infants will be followed through 18 months as scheduled.

Randomized infants prematurely discontinued from the study before the 6-month evaluation will have the following clinical and laboratory evaluations performed, if possible: . . .

- Roche Amplicor HIV-1 DNA PCR [polymerase chain reaction] and cell pellet storage

- Plasma for storage (for NVP [nevirapine] resistance, HIV-1 RNA PCR and NVP concentration)

- . . .

Randomized infants prematurely discontinued from the study at any time after the 6-month evaluation will have the following clinical and laboratory evaluations performed, if possible:

. . .

5.5 Participant Retention

Once an infant is enrolled or randomized, the study site will make every reasonable effort to follow the infant for the entire study period . . . It is projected that the rate of loss-to-follow-up on an annual basis will be at most 5% . . . Study site staff are responsible for developing and implementing local standard operating procedures to achieve this level of follow-up.

5.6 Participant Withdrawal

Participants may withdraw from the study for any reason at any time. The investigator also may withdraw participants from the study in order to protect their safety and/or if they are unwilling or unable to comply with required study procedures after consultation with the Protocol Chair, National Institutes of Health (NIH) Medical Officers, Statistical and Data Management Center (SDMC) Protocol Statistician, and Coordinating and Operations Center (CORE) Protocol Specialist.

Participants also may be withdrawn if the study sponsor or government or regulatory authorities terminate the study prior to its planned end date.

Note: Early discontinuation of study product for any reason is not a reason for withdrawal from the study.”287

Explanation

Trial investigators must often seek a balance between achieving a sufficiently long follow-up for clinically relevant outcome measurement,122;288 and a sufficiently short follow-up to decrease attrition and maximise completeness of data collection. Non-retention refers to instances where participants are prematurely ‘off-study’ (i.e., consent withdrawn or lost to follow-up) and thus outcome data cannot be obtained from them. The majority of trials will have some degree of non-retention, and the number of these ‘off-study’ participants usually increases with the length of follow-up.116
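Because losses accumulate over time, a projected annual loss rate translates into lower retention at longer follow-up. Under the simplifying assumption of a constant annual loss rate r that compounds, retention after t years is (1 − r)^t; with the 5% annual rate projected in Example 2 above, this gives roughly 92.6% retained at 18 months. A minimal sketch under that assumption:

```python
def expected_retention(annual_loss_rate: float, years: float) -> float:
    """Proportion still on study after `years`, assuming a constant, compounding annual loss rate."""
    if not 0 <= annual_loss_rate < 1:
        raise ValueError("annual_loss_rate must be in [0, 1)")
    return (1 - annual_loss_rate) ** years


# Assumed 5% annual loss, evaluated at several follow-up durations
for years in (0.5, 1, 1.5, 3):
    print(f"{years} years: {expected_retention(0.05, years):.1%} retained")
```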

It is desirable to plan ahead for how retention will be promoted in order to prevent missing data and avoid the associated complexities in both the study analysis (Item 20c) and interpretation. Certain methods can improve participant retention,67;152;289-292 such as financial reimbursement; systematic methods and reminders for contacting patients, scheduling appointments, and monitoring retention; and limiting participant burden related to follow-up visits and procedures (Item 13). A participant who withdraws consent for follow-up assessment of one outcome may be willing to continue with assessments for other outcomes, if given the option.

Non-retention should be distinguished from non-adherence.293 Non-adherence refers to deviation from intervention protocols (Item 11c) or from the follow-up schedule of assessments (Item 13), but does not mean that the participant is ‘off-study’ and no longer in the trial. Because missing data can be a major threat to trial validity and statistical power, non-adherence should not be an automatic reason for ceasing to collect data from the trial participant prior to study completion. In particular for randomised trials, it is widely recommended that all participants be included in an intention-to-treat analysis, regardless of adherence (Item 20c).
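The distinction between non-adherence and non-retention can be made explicit in how participant status is recorded. The sketch below is a hypothetical data model, not taken from any trial system: stopping the intervention is recorded separately from being off study, only off-study participants are exempt from further follow-up, and the intention-to-treat set keeps everyone as randomised. The per-protocol rule shown is an assumption for illustration; real criteria are protocol specific.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OffStudyReason(Enum):
    """Reasons for non-retention: the participant is no longer in the trial."""
    CONSENT_WITHDRAWN = "consent withdrawn"
    LOST_TO_FOLLOW_UP = "lost to follow-up"


@dataclass
class Participant:
    pid: str
    arm: str                                    # allocated (randomised) group
    stopped_intervention: bool = False          # non-adherence; still on study
    stop_reason: Optional[str] = None           # e.g. "harms", "lack of efficacy"
    off_study: Optional[OffStudyReason] = None  # non-retention; set only when off study

    def needs_follow_up(self) -> bool:
        # Stopping the intervention is not, by itself, a reason to stop data collection
        return self.off_study is None


def intention_to_treat_set(cohort: list[Participant]) -> list[str]:
    """Every randomised participant, analysed in the allocated arm regardless of adherence.

    Off-study participants stay in the set; their missing outcomes need a stated method.
    """
    return [p.pid for p in cohort]


def per_protocol_set(cohort: list[Participant]) -> list[str]:
    """Hypothetical per-protocol rule: still on study and never stopped the intervention."""
    return [p.pid for p in cohort if not p.stopped_intervention and p.off_study is None]


cohort = [
    Participant("01", "A"),
    Participant("02", "A", stopped_intervention=True, stop_reason="harms"),
    Participant("03", "B", off_study=OffStudyReason.LOST_TO_FOLLOW_UP),
]
print([p.pid for p in cohort if p.needs_follow_up()])  # ['01', '02']
print(intention_to_treat_set(cohort))                   # ['01', '02', '03']
print(per_protocol_set(cohort))                         # ['01']
```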

Protocols should describe any retention strategies and define which outcome data will be recorded from protocol non-adherers.152 Protocols should also detail any plans to record the reasons for non-adherence (e.g., discontinuation of intervention due to harms versus lack of efficacy) and non-retention (i.e., consent withdrawn; lost to follow-up), as this information can influence the handling of missing data and interpretation of results.152;294;295 [ps2id id=’data-management’ target=”/]

19. DATA MANAGEMENT

Plans for data entry, coding, security, and storage, including any related processes to promote data quality (e.g., double data entry; range checks for data values). Reference to where details of data management procedures can be found, if not in the protocol.

Example

“13.9.2. Data Forms and Data Entry

In the FSGS-CT [Focal Segmental Glomerulosclerosis – Clinical Trial], all data will be entered electronically. This may be done at a Core Coordinating Center or at the participating site where the data originated. Original study forms will be entered and kept on file at the participating site. A subset will be requested later for quality control; when a form is selected, the participating site staff will pull that form, copy it, and sent [sic] the copy to the DCC [Data Coordinating Center] for re-entry.

. . . Participant files are to be stored in numerical order and stored in a secure and accessible place and manner. Participant files will be maintained in storage for a period of 3 years after completion of the study.

13.9.3. Data Transmission and Editing

The data entry screens will resemble the paper forms approved by the Steering Committee. Data integrity will be enforced through a variety of mechanisms. Referential data rules, valid values, range checks, and consistency checks against data already stored in the database (i.e., longitudinal checks) will be supported. The option to chose [sic] a value from a list of valid codes and a description of what each code means will be available where applicable. Checks will be applied at the time of data entry into a specific field and/or before the data is written (committed) to the database. Modifications to data written to the database will be documented through either the data change system or an inquiry system. Data entered into the database will be retrievable for viewing through the data entry applications. The type of activity that an individual user may undertake is regulated by the privileges associated with his/her user identification code and password.

13.9.4. Data Discrepancy Inquiries and Reports to Core Coordinating Centers

Additional errors will be detected by programs designed to detect missing data or specific errors in the data. These errors will be summarized along with detailed descriptions for each specific problem in Data Query Reports, which will be sent to the Data Managers at the Core Coordinating Centers . . .

The Data Manager who receives the inquiry will respond by checking the original forms for inconsistency, checking other sources to determine the correction, modifying the original (paper) form entering a response to the query. Note that it will be necessary for Data Managers to respond to each inquiry received in order to obtain closure on the queried item.

The Core Coordinating Center and participating site personnel will be responsible for making appropriate corrections to the original paper forms whenever any data item is changed . . . Written documentation of changes will be available via electronic logs and audit trails.

. . .

Biopsy and biochemistry reports will be sent via e-mail when data are received from the Core Lab . . .

13.9.5. Security and Back-Up of Data

. . . All forms, diskettes and tapes related to study data will be kept in locked cabinets. Access to the study data will be restricted. In addition, Core Coordinating Centers will only have access to their own center’s data. A password system will be utilized to control access . . . These passwords will be changed on a regular basis. All reports prepared by the DCC will be prepared such that no individual subject can be identified.

A complete back up of the primary DCC database will be performed twice a month. These tapes will be stored off-site in a climate-controlled facility and will be retained indefinitely. Incremental data back-ups will be performed on a daily basis. These tapes will be retained for at least one week on-site. Back-ups of periodic data analysis files will also be kept. These tapes will be retained at the off-site location until the Study is completed and the database is on file with NIH [National Institutes of Health]. In addition to the system back-ups, additional measures will be taken to back-up and export the database on a regular basis at the database management level . . .

13.9.6. Study status reports

The DCC will send weekly email reports with information on missing data, missing forms, and missing visits. Personnel at the Core Coordinating Center and the Participating Sites should review these reports for accuracy and report any discrepancies to the DCC.

. . .

13.9.8. Description of Hardware at DCC

A SUN Workstation environment is maintained in the department with a SUN SPARCstation 10 model 41 as the server . . . Primary access to the departments [sic] computing facilities will be through the Internet . . . For maximum programming efficiency, the Oracle database management system and the SAS and BMDP statistical analysis systems will be employed for this study . . .

Oracle facilitates sophisticated integrity checks through a variety of mechanisms including stored procedures, stored triggers, and declarative database integrity–for between table verifications. Oracle allows data checks to be programmed once in the database rather than repeating the same checks among many applications . . . Security is enforced through passwords and may be assigned at different levels to groups and individuals.”267
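The entry-time checks described in this example (valid values, range checks, and longitudinal consistency checks against data already stored) can be sketched in a few lines. This is a minimal Python illustration with hypothetical field names, codes, and limits; the FSGS-CT implemented its checks inside Oracle, which this sketch does not attempt to reproduce.

```python
from typing import Optional

# Hypothetical rules; fields, codes and limits are illustrative only
VALID_CODES = {"sex": {"M", "F"}}
RANGES = {"weight_kg": (2.0, 250.0), "systolic_bp": (60, 260)}
MAX_HEIGHT_DROP_CM = 2.0  # longitudinal rule: height should not fall between visits


def edit_check(record: dict, previous: Optional[dict] = None) -> list[str]:
    """Return query messages for one visit record; an empty list means it passes."""
    queries = []
    # Valid-value check: coded fields must use a code from the list
    for field, codes in VALID_CODES.items():
        if record.get(field) not in codes:
            queries.append(f"{field}: invalid code {record.get(field)!r}")
    # Range check: numeric fields must be present and within plausible limits
    for field, (low, high) in RANGES.items():
        value = record.get(field)
        if value is None:
            queries.append(f"{field}: missing")
        elif not low <= value <= high:
            queries.append(f"{field}: {value} outside {low}-{high}")
    # Longitudinal check against the value stored for the previous visit
    if previous is not None and "height_cm" in record and "height_cm" in previous:
        if previous["height_cm"] - record["height_cm"] > MAX_HEIGHT_DROP_CM:
            queries.append("height_cm: decreased since previous visit")
    return queries


baseline = {"sex": "F", "weight_kg": 61.0, "systolic_bp": 118, "height_cm": 165.0}
visit_2 = {"sex": "X", "weight_kg": 610.0, "height_cm": 158.0}
for query in edit_check(visit_2, previous=baseline):
    print(query)
```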

Explanation

Careful planning of data management with appropriate personnel can help to prevent flaws that compromise data validity. The protocol should provide a full description of the data entry and coding processes, along with measures to promote their quality, or provide key elements and a reference to where full information can be found. These details are particularly important for the primary outcome data. The protocol should also document data security measures to prevent unauthorised access to or loss of participant data, as well as plans for data storage (including timeframe) during and after the trial. This information facilitates an assessment of adherence to applicable standards and regulations.

Differences in data entry methods can affect the trial in terms of data accuracy,268 cost, and efficiency.271 For example, when compared with paper case report forms, electronic data capture can reduce the time required for data entry, query resolution, and database release by combining data entry with data collection (Item 18a).271;277 When data are collected on paper forms, data entry can be performed locally or at a central site. Local data entry can enable fast correction of missing or inaccurate data, while central data entry facilitates blinding (masking), standardisation, and training of a core group of data entry personnel.

Raw, non-numeric data are usually coded for ease of data storage, review, tabulation, and analysis. It is important to define standard coding practices to reduce errors and observer variation. When data entry and coding are performed by different individuals, it is particularly important that the personnel use unambiguous, standardised terminology and abbreviations to avoid misinterpretation.

As with data collection (Item 18a), standard processes are often implemented to improve the accuracy of data entry and coding.281;284 Common examples include double data entry296; verification that the data are in the proper format (e.g., integer) or within an expected range of values; and independent source document verification of a random subset of data to identify missing or apparently erroneous values. Although independent double data entry from paper forms is widely performed to detect data entry errors, its time and costs need to be weighed against the magnitude of reduction in error rates compared with single data entry.297-299 [ps2id id=’outcomes’ target=”/]
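Double data entry compares two independent keyings of the same form and flags every field where they differ, so that each discrepancy can be resolved against the source document. A minimal sketch with a hypothetical form and values:

```python
def compare_entries(first: dict, second: dict) -> list[tuple]:
    """Return (field, first_value, second_value) for every field where two entries disagree."""
    fields = sorted(set(first) | set(second))  # include fields keyed in only one pass
    return [(f, first.get(f), second.get(f)) for f in fields if first.get(f) != second.get(f)]


# Hypothetical: the same paper form keyed independently by two data entry staff
entry_1 = {"pid": "P014", "visit": 2, "weight_kg": 61.5, "systolic_bp": 118}
entry_2 = {"pid": "P014", "visit": 2, "weight_kg": 65.1, "systolic_bp": 118}

discrepancies = compare_entries(entry_1, entry_2)
for field, v1, v2 in discrepancies:
    # Each mismatch is resolved against the source form, not by picking either entry
    print(f"{field}: entry 1 = {v1}, entry 2 = {v2}; check source form")
rate = len(discrepancies) / len(set(entry_1) | set(entry_2))
print(f"discrepancy rate: {rate:.0%} of fields")
```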

20. STATISTICAL METHODS

20a. Outcomes

Statistical methods for analysing primary and secondary outcomes. Reference to where other details of the statistical analysis plan can be found, if not in the protocol.

Example

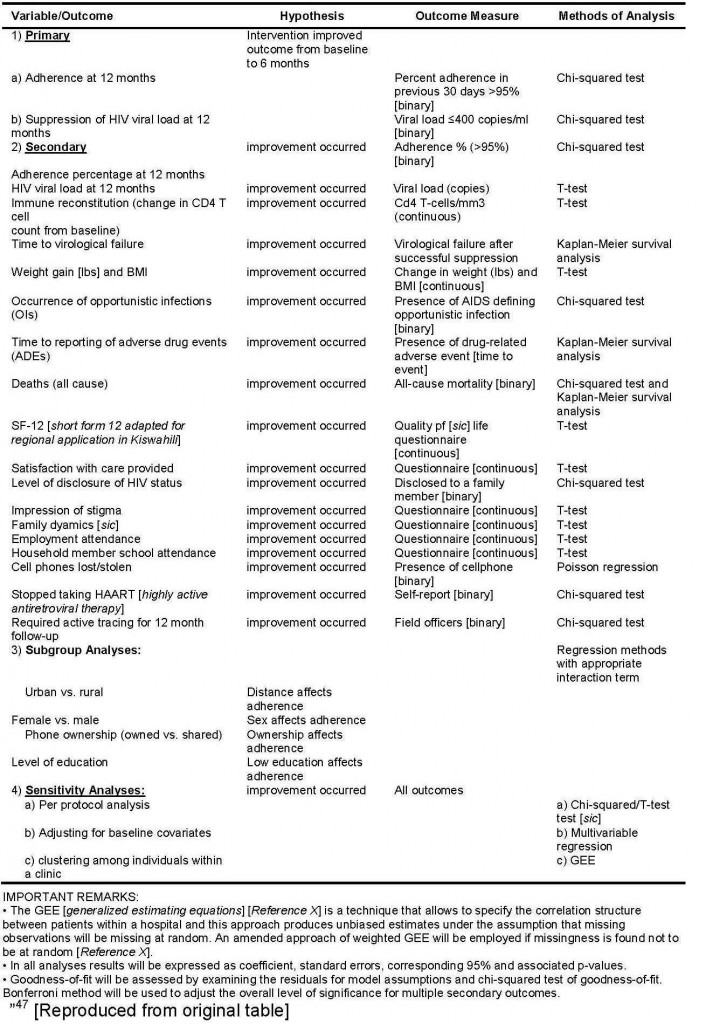

“The intervention arm (SMS [short message system (text message)]) will be compared against the control (SOC [standard of care]) for all primary analysis. We will use chi-squared test for binary outcomes, and T-test for continuous outcomes. For subgroup analyses, we will use regression methods with appropriate interaction terms (respective subgroup × treatment group). Multivariable analyses will be based on logistic regression . . . for binary outcomes and linear regression for continuous outcomes. We will examine the residual to assess model assumptions and goodness-of-fit. For timed endpoints such as mortality we will use the Kaplan-Meier survival analysis followed by multivariable Cox proportional hazards model for adjusting for baseline variables. We will calculate Relative Risk (RR) and RR Reductions (RRR) with corresponding 95% confidence intervals to compare dichotomous variables, and difference in means will be used for additional analysis of continuous variables. P-values will be reported to four decimal places with p-values less than 0.001 reported as p < 0.001. Up-to-date versions of SAS (Cary, NC) and SPSS (Chicago, IL) will be used to conduct analyses. For all tests, we will use 2-sided p-values with alpha = < 0.05 level of significance. We will use the Bonferroni method to appropriately adjust the overall level of significance for multiple primary outcomes, and secondary outcomes.

To assess the impact of potential clustering for patients cared by the same clinic, we will use generalized estimating equations [GEE] assuming an exchangeable correlation structure. Table 3 provides a summary of methods of analysis for each variable. Professional academic statisticians (LT, RN) blinded to study groups will conduct all analyses.

Table 3: Variables, Measures and Methods of Analysis

Explanation

The protocol should indicate explicitly each intended analysis comparing study groups. An unambiguous, complete, and transparent description of statistical methods facilitates execution, replication, critical appraisal, and the ability to track any changes from the original pre-specified methods.

Results for the primary outcome can be substantially affected by the choice of analysis methods. When investigators apply more than one analysis strategy for a specific primary outcome, there is potential for inappropriate selective reporting of the most interesting result.6 The protocol should pre-specify the main (“primary”) analysis of the primary outcome (Item 12), including the analysis methods to be used for statistical comparisons (Items 20a and 20b); precisely which trial participants will be included (Item 20c); and how missing data will be handled (Item 20c). Additionally, it is helpful to indicate the effect measure (e.g., relative risk) and significance level that will be used, as well as the intended use of confidence intervals when presenting results.
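As an illustration of pre-specifying an effect measure with its confidence interval, the sketch below computes a relative risk, the relative risk reduction (1 − RR), and a 95% confidence interval using the common Wald interval on the log scale. The event counts are hypothetical, and a protocol may reasonably choose a different interval method.

```python
import math


def relative_risk(events_tx: int, n_tx: int, events_ctl: int, n_ctl: int, z: float = 1.96):
    """Relative risk (intervention vs control) with a Wald CI computed on the log scale."""
    if events_tx == 0 or events_ctl == 0:
        raise ValueError("this simple interval needs at least one event per group")
    rr = (events_tx / n_tx) / (events_ctl / n_ctl)
    # Standard error of log(RR)
    se = math.sqrt(1 / events_tx - 1 / n_tx + 1 / events_ctl - 1 / n_ctl)
    lower = math.exp(math.log(rr) - z * se)
    upper = math.exp(math.log(rr) + z * se)
    return rr, (lower, upper)


# Hypothetical: 30/200 events with the intervention, 45/200 with control
rr, (lo, hi) = relative_risk(events_tx=30, n_tx=200, events_ctl=45, n_ctl=200)
print(f"RR = {rr:.2f} (95% CI {lo:.2f} to {hi:.2f}); RRR = {1 - rr:.0%}")
```

The corresponding interval for the RRR is 1 minus each RR bound, with the bounds swapped.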

The same considerations will often apply equally to pre-specified secondary and exploratory outcomes. In some instances, descriptive approaches to evaluating rare outcomes – such as adverse events – might be preferred over formal analysis given the lack of power.300 Adequately powered analyses may require pre-planned meta-analyses with results from other studies.

Most trials are affected to some extent by multiplicity issues.301;302 When multiple statistical comparisons are performed (e.g., multiple study groups, outcomes, interim analyses), the risk of false positive (type 1) error is inflated and there is increased potential for selective reporting of favourable comparisons in the final trial report. For trials with more than two study groups, it is important to specify in the protocol which comparisons (of two or more study groups) will be performed and, if relevant, which will be the main comparison of interest. The same principle of specifying the main comparison also applies when there is more than one outcome, including when the same variable is measured at several time points (Item 12). Any statistical approaches to account for multiple comparisons and time points should also be described.
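Two small calculations show why multiplicity matters. If k independent comparisons are each tested at level α, the chance of at least one false positive is 1 − (1 − α)^k, about 0.23 for five tests at 0.05. The Bonferroni method named in the Item 20a example controls this by testing each comparison at α/k. A sketch, assuming independent tests and hypothetical p-values:

```python
def familywise_error(alpha: float, k: int) -> float:
    """Chance of at least one false positive across k independent tests at level alpha."""
    return 1 - (1 - alpha) ** k


def bonferroni(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Reject each null hypothesis only if its p-value is at most alpha / k."""
    k = len(p_values)
    return [p <= alpha / k for p in p_values]


print(f"{familywise_error(0.05, 5):.3f}")
print(bonferroni([0.004, 0.020, 0.300]))  # [True, False, False]
```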

Finally, different trial designs dictate the most appropriate analysis plan and any additional relevant information that should be included in the protocol. For example, cluster, factorial, crossover, and within-person randomised trials require specific statistical considerations, such as how clustering will be handled in a cluster randomised trial.[ps2id id=’additional-analyses’ target=”/]
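For a cluster randomised trial, or for the clinic clustering handled with GEE in the Item 20a example, one simple way to see what is at stake is the design effect, 1 + (m − 1)ρ, for clusters of average size m and intracluster correlation ρ. It approximates the factor by which clustering inflates the variance of an estimate compared with independent observations. The cluster size and ICC below are hypothetical, and the sketch is not a substitute for a clustered analysis such as GEE or mixed models.

```python
def design_effect(mean_cluster_size: float, icc: float) -> float:
    """Variance inflation for roughly equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n: int, mean_cluster_size: float, icc: float) -> float:
    """Number of independent observations carrying the same information as n clustered ones."""
    return n / design_effect(mean_cluster_size, icc)


# Hypothetical: 1,000 patients in clinics of about 20 patients each, ICC = 0.05
print(f"design effect = {design_effect(20, 0.05):.2f}")
print(f"effective n = {effective_sample_size(1000, 20, 0.05):.0f}")
```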

20b. Additional analyses

Methods for any additional analyses (e.g., subgroup and adjusted analyses).

Example 1

“We plan to conduct two subgroup analyses, both with strong biological rationale and possible interaction effects. The first will compare hazard ratios of re-operation based upon the degree of soft tissue injury (Gustilo-Anderson Type I/II open fractures vs. Gustilo-Anderson Type IIIA/B open fractures). The second will compare hazard ratios of re-operation between fractures of the upper and lower extremity. We will test if the treatment effects differ with fracture types and extremities by putting their main effect and interaction terms in the Cox regression. For the comparison of pressure, we anticipate that the low/gravity flow will be more effective in the Type IIIA-B open fracture than in the Type I/II open fracture, and be more effective in the upper extremity than the lower extremity. For the comparison of solution, we anticipate that soap will do better in the Type IIIA-B open fracture than in the Type I/II open fracture, and better in the upper extremity than the lower extremity.”303

Example 2

“A secondary analysis of the primary endpoint will adjust for those pre-randomization variables which might reasonably be expected to be predictive of favorable outcomes. Generalized linear models will be used to model the proportion of subjects with neurologically intact (MRS ≤ 3 [Modified Rankin Score]) survival to hospital discharge by ITD [impedance threshold device]/sham device group adjusted for site (dummy variables modeling the 11 ROC [Resuscitation Outcomes Consortium] sites), patient sex, patient age (continuous variable), witness status (dummy variables modeling the three categories of unwitnessed arrest, non-EMS [emergency medical services] witnessed arrest, and EMS witnessed arrest), location of arrest (public versus non-public), time or response (continuous variable modeling minutes between call to 911 and arrival of EMS providers on scene), presenting rhythm (dummy variables modeling asystole, PEA [pulseless electrical activity], VT/VF [ventricular tachycardia/fibrillation], or unknown), and treatment assignment in the Analyze Late vs. Analyze Early intervention. The test statistic used to assess any benefit of the ITD relative to the sham device will be computed as the generalized linear model regression coefficient divided by the estimated “robust” standard error based on the Huber- White sandwich estimator[Reference X] in order to account for within group variability which might depart from the classical assumptions. Statistical inference will be based on one-sided P values and 95% confidence intervals which adjust for the stopping rule used for the primary analysis.”304

Explanation

Subgroup analysis

Subgroup analyses explore whether estimated treatment effects vary significantly between subcategories of trial participants. As these data can help tailor healthcare decisions to individual patients, a modest number of pre-specified subgroup analyses can be sensible.

However, subgroup analyses are problematic if they are inappropriately conducted or selectively reported. Subgroup analyses described in protocols or grant applications do not match those reported in subsequent publications for more than two-thirds of randomised trials, suggesting that subgroup analyses are often selectively reported or not pre-specified.6;7;305 Post hoc (data-driven) analyses have a high risk of spurious findings and are discouraged.306 Conducting a large number of subgroup comparisons leads to issues of multiplicity, even when all of the comparisons have been pre-specified. Furthermore, when subgroups are based on variables measured after randomisation, the analyses are particularly susceptible to bias.307

Pre-planned subgroup analyses should be clearly specified in the protocol with respect to the precise baseline variables to be examined, the definition of the subgroup categories (including cut-off boundaries for continuous or ordinal variables), the statistical method to be used, and the hypothesized direction of the subgroup effect based on plausibility.308;309
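The elements listed above (baseline variable, cut-off, statistical method, hypothesised direction) can be fixed in advance as a small analysis plan. The Python sketch below assumes a binary outcome analysed on the risk-difference scale and uses a Wald z test for the difference between subgroup-specific effects (a test of interaction) as the statistical method. The variable name, cut-off and direction are hypothetical placeholders, not recommendations.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class SubgroupPlan:
    """One pre-specified subgroup analysis; all values used below are hypothetical."""
    variable: str             # baseline (pre-randomisation) variable
    cutoff: float             # boundary fixed in the protocol: value >= cutoff is "high"
    expected_larger_in: str   # hypothesised direction: "high" or "low"

def risk_difference(events_t, n_t, events_c, n_c):
    """Risk difference (treatment minus control) and its Wald standard error."""
    p_t, p_c = events_t / n_t, events_c / n_c
    se = math.sqrt(p_t * (1 - p_t) / n_t + p_c * (1 - p_c) / n_c)
    return p_t - p_c, se

def analyse_subgroups(plan, records):
    """records: iterable of (baseline_value, arm, outcome), arm in {"T", "C"}, outcome 0/1.

    Returns the effect in each subgroup, the interaction z statistic, its two-sided
    P value, and whether the observed direction matches the hypothesised one.
    """
    counts = {(g, arm): [0, 0] for g in ("high", "low") for arm in ("T", "C")}
    for value, arm, outcome in records:
        group = "high" if value >= plan.cutoff else "low"
        counts[(group, arm)][0] += outcome   # events
        counts[(group, arm)][1] += 1         # participants
    effects = {g: risk_difference(*counts[(g, "T")], *counts[(g, "C")]) for g in ("high", "low")}
    (rd_hi, se_hi), (rd_lo, se_lo) = effects["high"], effects["low"]
    # Test of interaction: compare the two subgroup effects directly.
    z = (rd_hi - rd_lo) / math.sqrt(se_hi ** 2 + se_lo ** 2)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2.0))
    observed_larger = "high" if rd_hi > rd_lo else "low"
    return effects, z, p_two_sided, observed_larger == plan.expected_larger_in

# Usage with hypothetical data.
plan = SubgroupPlan(variable="age", cutoff=65, expected_larger_in="high")
records = ([(70, "T", 1)] * 6 + [(70, "T", 0)] * 4 + [(70, "C", 1)] * 3 + [(70, "C", 0)] * 7
           + [(50, "T", 1)] * 4 + [(50, "T", 0)] * 6 + [(50, "C", 1)] * 3 + [(50, "C", 0)] * 7)
effects, z, p, as_hypothesised = analyse_subgroups(plan, records)
print(effects, round(z, 3), round(p, 3), as_hypothesised)
```

Comparing the subgroup effects directly, rather than noting that one subgroup is "significant" and the other is not, is the point of an interaction test; such tests typically have less power than the test of the overall effect.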

Adjusted analysis

Some trials pre-specify adjusted analyses to account for imbalances between study groups (e.g., chance imbalance across study groups in small trials), improve power, or account for a known prognostic variable. Adjustment is often recommended for any variables used in the allocation process (e.g., in stratified randomisation), on the principle that the analysis strategy should match the design.310 Most trial protocols and publications do not adequately address issues of adjustment, particularly the description of variables.6;310

It is important that trial investigators indicate in the protocol if there is an intention to perform or consider adjusted analyses, explicitly specifying any variables for adjustment and how continuous variables will be handled. When both unadjusted and adjusted analyses are intended, the main analysis should be identified (Item 20a). It may not always be clear, in advance, which variables will be important for adjustment. In such situations, the objective criteria to be used to select variables should be pre-specified. As with subgroup analyses, adjustment variables based on post-randomisation data rather than baseline data can introduce bias.311;312
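When randomisation was stratified (for example, by site), one way to make the analysis match the design is to estimate the treatment effect within each stratum and pool the results. The sketch below uses the Mantel-Haenszel risk difference as the adjusted estimator; this is one choice among several (regression adjustment is another), the stratum counts are hypothetical, and a variance estimate for the pooled effect is omitted for brevity.

```python
def mantel_haenszel_rd(strata):
    """Mantel-Haenszel pooled risk difference (treatment minus control).

    strata: list of (events_t, n_t, events_c, n_c) tuples, one per stratum,
    e.g. one per site when randomisation was stratified by site.
    Each stratum-specific risk difference gets weight n_t * n_c / (n_t + n_c).
    """
    num = den = 0.0
    for e_t, n_t, e_c, n_c in strata:
        w = n_t * n_c / (n_t + n_c)
        num += w * (e_t / n_t - e_c / n_c)
        den += w
    return num / den

def crude_rd(strata):
    """Unadjusted risk difference that ignores the strata."""
    e_t = sum(s[0] for s in strata)
    n_t = sum(s[1] for s in strata)
    e_c = sum(s[2] for s in strata)
    n_c = sum(s[3] for s in strata)
    return e_t / n_t - e_c / n_c

# Hypothetical two-site trial with a chance imbalance in allocation at site 2.
sites = [(6, 10, 3, 10), (2, 20, 1, 10)]
print(round(crude_rd(sites), 4), round(mantel_haenszel_rd(sites), 4))
```

Because the two sites differ in baseline risk and in how many participants each arm contributes, the crude and stratified estimates diverge; the stratified estimate compares like with like within each site.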

20c. Analysis population and missing data

Definition of analysis population relating to protocol non-adherence (e.g., as randomised analysis), and any statistical methods to handle missing data (e.g., multiple imputation).

Example

“Nevertheless, we propose to test non-inferiority using two analysis sets; the intention-to-treat set, considering all patients as randomized regardless of whether they received the randomized treatment, and the “per protocol” analysis set. Criteria for determining the “per protocol” group assignment would be established by the Steering Committee and approved by the PSMB [Performance and Safety Monitoring Board] before the trial begins. Given our expectation that very few patients will crossover or be lost to follow-up, these analyses should agree very closely. We propose declaring medical management non-inferior to interventional therapy, only if shown to be non-inferior using both the “intention to treat” and “per protocol” analysis sets.

. . .

10.4.7 Imputation Procedure for Missing Data

While the analysis of the primary endpoint (death or stroke) will be based on a log-rank test and, therefore, not affected by patient withdrawals (as they will be censored) provided that dropping out is unrelated to prognosis; other outcomes, such as the Rankin Score at five years post-randomization, could be missing for patients who withdraw from the trial. We will report reasons for withdrawal for each randomization group and compare the reasons qualitatively . . . The effect that any missing data might have on results will be assessed via sensitivity analysis of augmented data sets. Dropouts (essentially, participants who withdraw consent for continued follow-up) will be included in the analysis by modern imputation methods for missing data.

The main feature of the approach is the creation of a set of clinically reasonable imputations for the respective outcome for each dropout. This will be accomplished using a set of repeated imputations created by predictive models based on the majority of participants with complete data. The imputation models will reflect uncertainty in the modeling process and inherent variability in patient outcomes, as reflected in the complete data.

After the imputations are completed, all of the data (complete and imputed) will be combined and the analysis performed for each imputed-and-completed dataset. Rubin’s method of multiple (i.e., repeated) imputation will be used to estimate treatment effect. We propose to use 15 datasets (an odd number to allow use of one of the datasets to represent the median analytic result).

These methods are preferable to simple mean imputation, or simple “best-worst” or “worst-worst” imputation, because the categorization of patients into clinically meaningful subgroups, and the imputation of their missing data by appropriately different models, accords well with best clinical judgment concerning the likely outcomes of the dropouts, and therefore will enhance the trial’s results.”313
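The example relies on Rubin's method for combining results across multiply imputed datasets. A minimal sketch of those combining rules follows. It assumes each completed dataset has already been analysed to give a point estimate and its squared standard error; the imputation model itself is trial-specific and is not shown, and the input numbers are hypothetical.

```python
import math
import statistics

def rubin_pool(estimates, variances):
    """Combine m completed-data results with Rubin's rules.

    estimates: point estimates, one per imputed dataset.
    variances: their squared standard errors.
    Returns (pooled estimate, pooled standard error, degrees of freedom).
    """
    m = len(estimates)
    if m < 2 or m != len(variances):
        raise ValueError("need at least two imputed datasets with matching variances")
    q_bar = statistics.fmean(estimates)        # pooled point estimate
    w = statistics.fmean(variances)            # within-imputation variance
    b = statistics.variance(estimates)         # between-imputation variance (divisor m - 1)
    t = w + (1 + 1 / m) * b                    # total variance
    if b == 0:
        df = math.inf                          # imputation adds no extra uncertainty
    else:
        r = (1 + 1 / m) * b / w                # relative increase in variance due to missing data
        df = (m - 1) * (1 + 1 / r) ** 2
    return q_bar, math.sqrt(t), df

# Hypothetical results from three imputed datasets.
est, se, df = rubin_pool([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
print(est, round(se, 4), df)
```

With an odd number of datasets, as the example proposes, `statistics.median(estimates)` returns the estimate from one of the actual imputed datasets rather than the average of two.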

Explanation

In order to preserve the unique benefit of randomisation as a mechanism to avoid selection bias, an “as randomised” analysis retains participants in the group to which they were originally allocated. To prevent attrition bias, outcome data obtained from all participants are included in the data analysis, regardless of protocol adherence (Items 11c and 18b).249;250 These two conditions (i.e., all participants, as randomised) define an “intention to treat” analysis, which is widely recommended as the preferred analysis strategy.17

Some trialists use other types of data analyses (commonly labelled as “modified intention to treat” or “per protocol”) that exclude data from certain participants – such as those who are found to be ineligible after randomisation or who deviate from the intervention or follow-up protocols. This exclusion of data from protocol non-adherers can introduce bias, particularly if the frequency of and the reasons for non-adherence vary between the study groups.314;315 In some trials, the participants to be included in the analysis will vary by outcome – for example, analysis of harms (adverse events) is sometimes restricted to participants who received the intervention, so that absence or occurrence of harm is not attributed to a treatment that was never received.

Protocols should explicitly describe which participants will be included in the main analyses (e.g., all randomised participants, regardless of protocol adherence) and define the study group in which they will be analysed (e.g., as-randomised). In one cohort of randomised trials approved in 1994-5, this information was missing in half of the protocols.6 The ambiguous use of labels such as “intention to treat” or “per protocol” should be avoided unless they are fully defined in the protocol.6;314 Most analyses labelled as “intention to treat” do not actually adhere to its definition because of missing data or exclusion of participants who do not meet certain post-randomisation criteria (e.g., specific level of adherence to intervention).6;316 Other ambiguous labels such as “modified intention to treat” are also variably defined from one trial to another.314
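Because labels such as "per protocol" are defined differently from one trial to another, it can help to state the inclusion rule for each analysis set as an explicit filter over participant records. The Python sketch below is illustrative only: the record fields and the per-protocol criteria are hypothetical, and a real protocol would define them precisely.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Participant:
    pid: str
    randomised_arm: str            # arm allocated at randomisation
    received_arm: Optional[str]    # arm actually received; None if no intervention given
    adherent: bool                 # met the protocol's (hypothetical) adherence criteria
    eligible: bool                 # still judged eligible after randomisation
    outcome: Optional[int]         # None when the outcome is missing

def intention_to_treat(participants):
    """All randomised participants, each analysed in the arm allocated at randomisation."""
    return [(p.pid, p.randomised_arm) for p in participants]

def per_protocol(participants):
    """Hypothetical definition: eligible, adherent, and received the allocated arm."""
    return [(p.pid, p.randomised_arm) for p in participants
            if p.eligible and p.adherent and p.received_arm == p.randomised_arm]

def harms_set(participants):
    """Participants who received any intervention, analysed by the arm actually received."""
    return [(p.pid, p.received_arm) for p in participants if p.received_arm is not None]

cohort = [
    Participant("01", "A", "A", True, True, 1),
    Participant("02", "A", "B", False, True, 0),      # crossed over to B
    Participant("03", "B", None, False, True, None),  # never treated, outcome missing
    Participant("04", "B", "B", True, False, 1),      # found ineligible after randomisation
]
for name, rule in [("ITT", intention_to_treat), ("per protocol", per_protocol), ("harms", harms_set)]:
    print(name, rule(cohort))
```

The intention to treat set keeps every randomised participant, including participant 03 whose outcome is missing, which is why it has to be paired with a plan for handling missing data.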

In addition to defining the analysis population, it is necessary to address the issue of missing data in the protocol. Most trials have some degree of missing data,317;318 which can introduce bias depending on the pattern of missingness (e.g., not missing at random). Strategies to maximise follow-up and prevent missing data, as well as the recording of reasons for missing data, are thus important to develop and document (Item 18b).152
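Recording reasons for missing data pays off at the analysis stage, where the extent of missingness and its reasons can be compared between randomised groups. A minimal Python sketch, assuming each record carries the randomised arm, the outcome (None if missing) and a reason (None if not recorded); the record layout and data are hypothetical.

```python
from collections import Counter

def missingness_by_arm(records):
    """Summarise missing outcomes per randomised arm.

    records: iterable of (arm, outcome, reason) tuples; outcome is None when
    missing, and reason may be None if no reason was recorded.
    Returns (proportion missing per arm, Counter of (arm, reason) pairs).
    """
    totals, missing, reasons = Counter(), Counter(), Counter()
    for arm, outcome, reason in records:
        totals[arm] += 1
        if outcome is None:
            missing[arm] += 1
            reasons[(arm, reason or "reason not recorded")] += 1
    proportions = {arm: missing[arm] / totals[arm] for arm in totals}
    return proportions, reasons

# Hypothetical records.
data = [
    ("A", 1, None), ("A", None, "withdrew consent"), ("A", 0, None),
    ("B", None, "lost to follow-up"), ("B", None, None), ("B", 1, None),
]
props, why = missingness_by_arm(data)
print(props)
for (arm, reason), count in sorted(why.items()):
    print(arm, reason, count)
```

Differences between arms in the proportion missing or in the reasons do not by themselves show that data are not missing at random, but they indicate where sensitivity analyses matter most.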

The protocol should also state how missing data will be handled in the analysis and detail any planned methods to impute (estimate) missing outcome data, including which variables will be used in the imputation process (if applicable).152 Different statistical approaches can lead to different results and conclusions,317;319 but one study found that only 23% of trial protocols specified the planned statistical methods to account for missing data.6

Imputation of missing data allows the analysis to conform to an intention to treat analysis but requires strong assumptions that are untestable and may be hard to justify.152;318;320;321 Methods of multiple imputation are more complex but are widely preferred to single imputation methods (e.g., last observation carried forward; baseline observation carried forward), as the latter introduce greater bias and produce confidence intervals that are too narrow.152;320-322 Specific issues arise when outcome data are missing for crossover or cluster randomised trials.323 Finally, sensitivity analyses are highly recommended to assess the robustness of trial results under different methods of handling missing data.152;324
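One simple, transparent sensitivity analysis for a binary outcome brackets the result between extreme assumptions about participants with missing outcomes (the "best-worst" and "worst-worst" imputations mentioned in the example for this item). The sketch assumes the outcome is an adverse event (1 = event, so "worst" means the event occurred) and reports risk differences as treatment minus control; the counts are hypothetical. Such bounds complement, rather than replace, a principled approach such as multiple imputation.

```python
def extreme_case_bounds(events_t, observed_t, missing_t, events_c, observed_c, missing_c):
    """Risk differences (treatment minus control) under extreme assumptions
    about missing binary outcomes, where 1 = adverse event."""
    n_t = observed_t + missing_t
    n_c = observed_c + missing_c
    return {
        # Analyse only participants with observed outcomes.
        "complete_case": events_t / observed_t - events_c / observed_c,
        # Best for treatment: treatment dropouts event-free, control dropouts all have the event.
        "best_worst": events_t / n_t - (events_c + missing_c) / n_c,
        # Worst for treatment: treatment dropouts all have the event, control dropouts event-free.
        "worst_best": (events_t + missing_t) / n_t - events_c / n_c,
        # Every dropout in both arms is assumed to have had the event.
        "worst_worst": (events_t + missing_t) / n_t - (events_c + missing_c) / n_c,
    }

# Hypothetical counts: treatment 20 events in 90 observed, 10 missing;
# control 30 events in 95 observed, 5 missing.
results = extreme_case_bounds(20, 90, 10, 30, 95, 5)
for name, rd in results.items():
    print(f"{name:>13}: {rd:+.3f}")
```

If the trial's conclusion holds across the whole range from "best_worst" to "worst_best", missing data are unlikely to overturn it; if not, the protocol's chosen method for handling missing data carries real weight.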